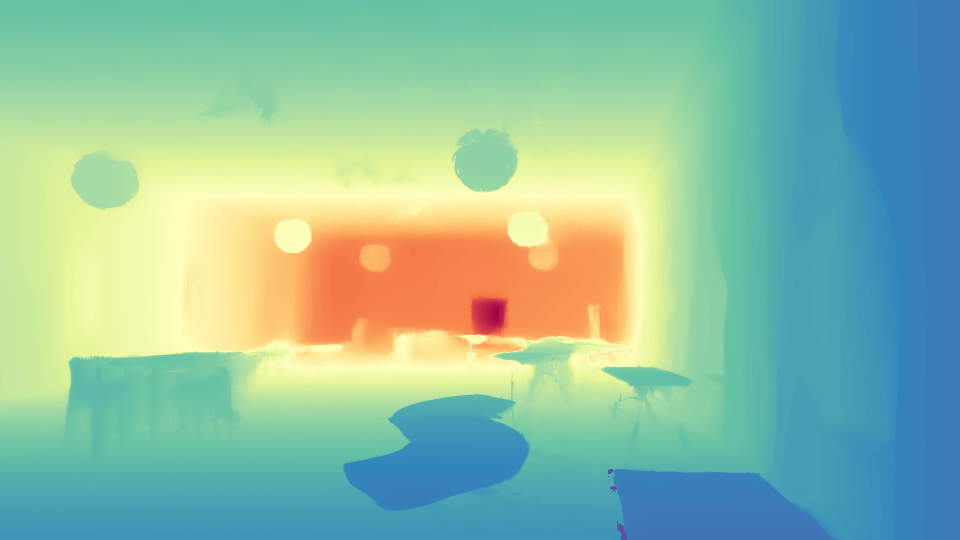

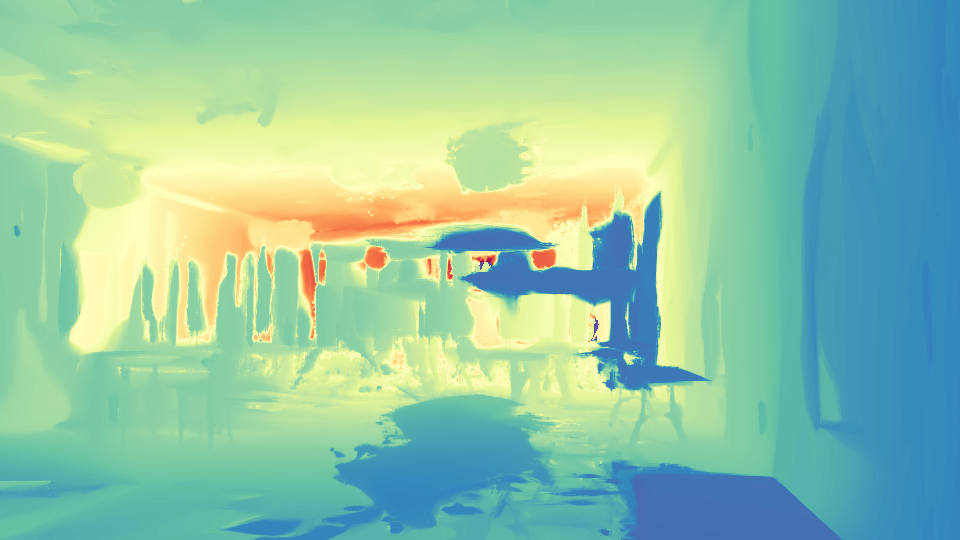

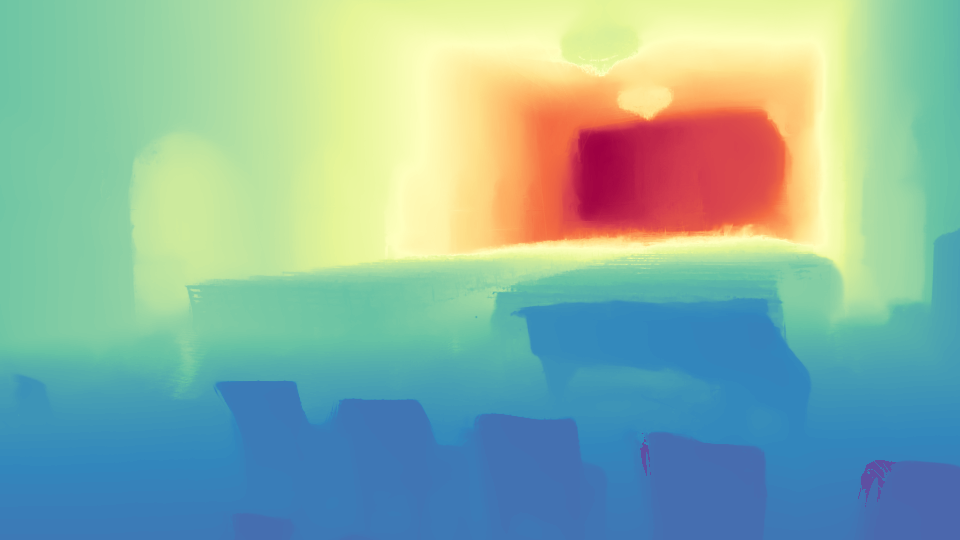

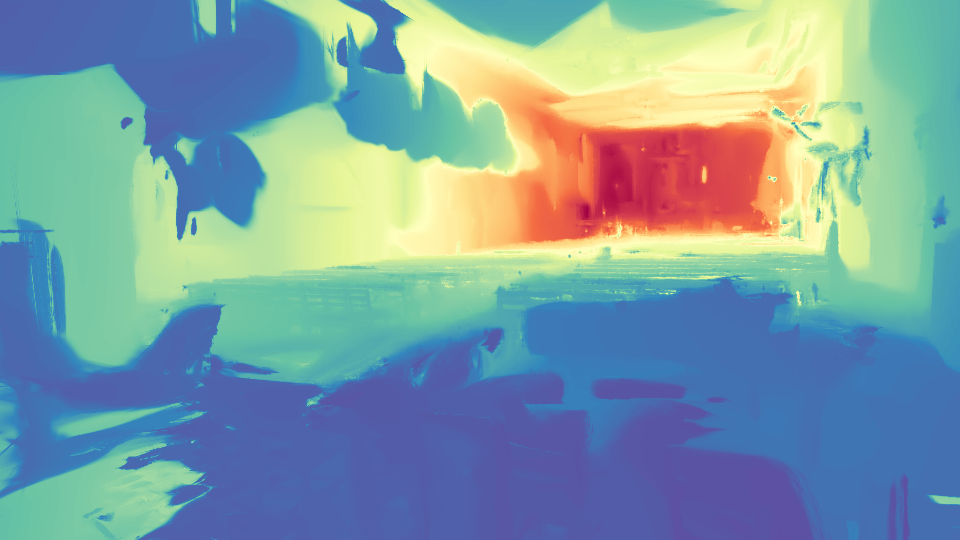

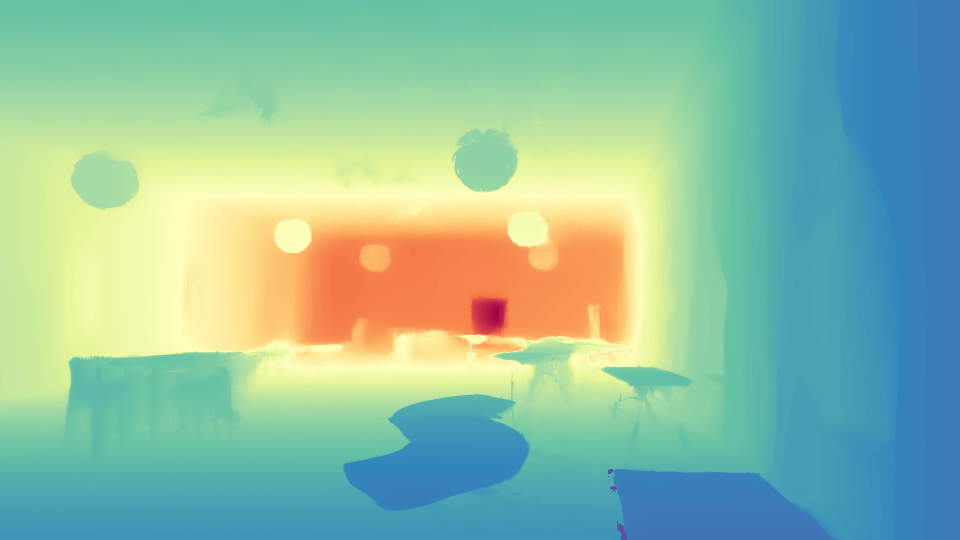

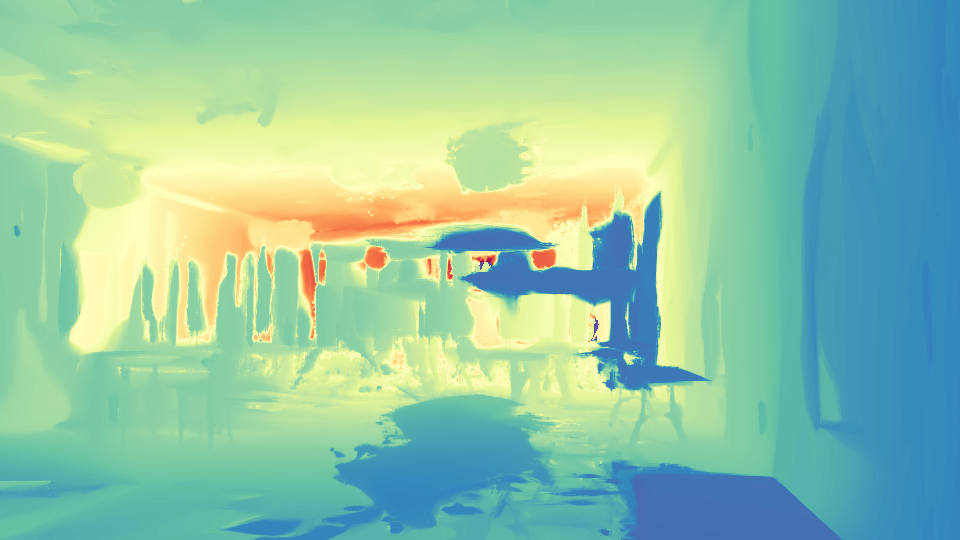

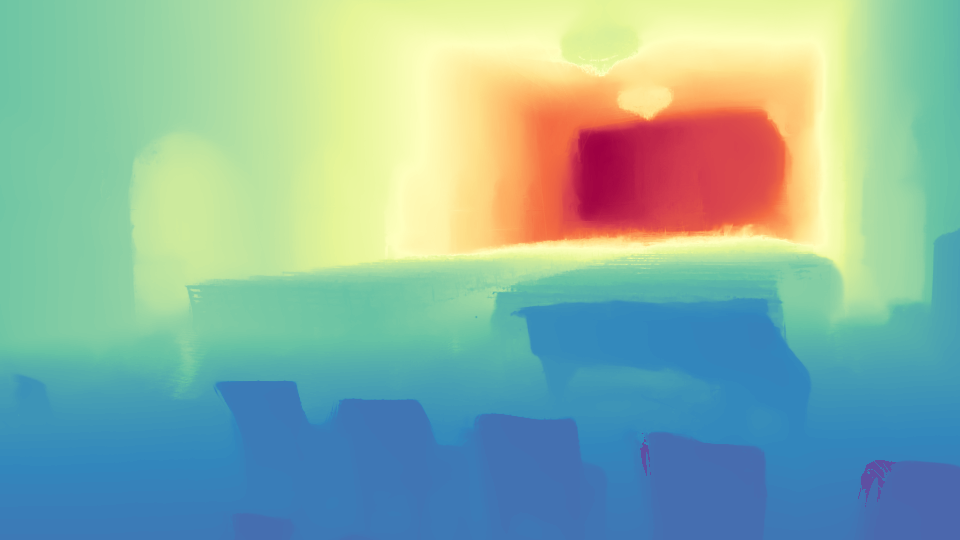

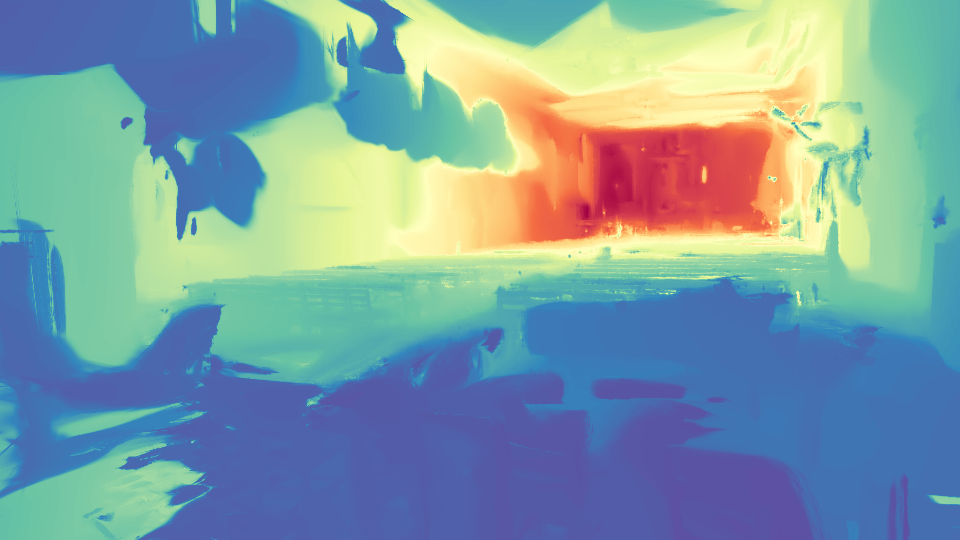

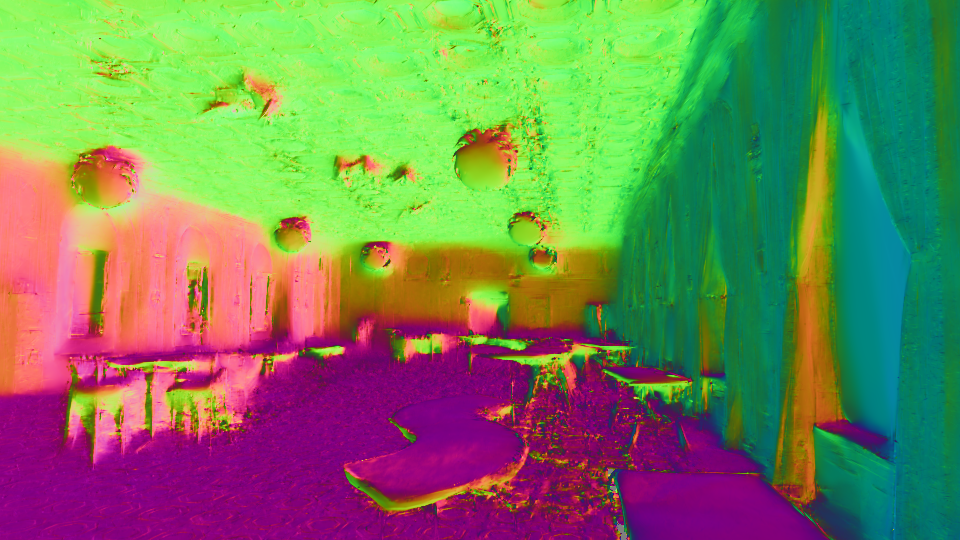

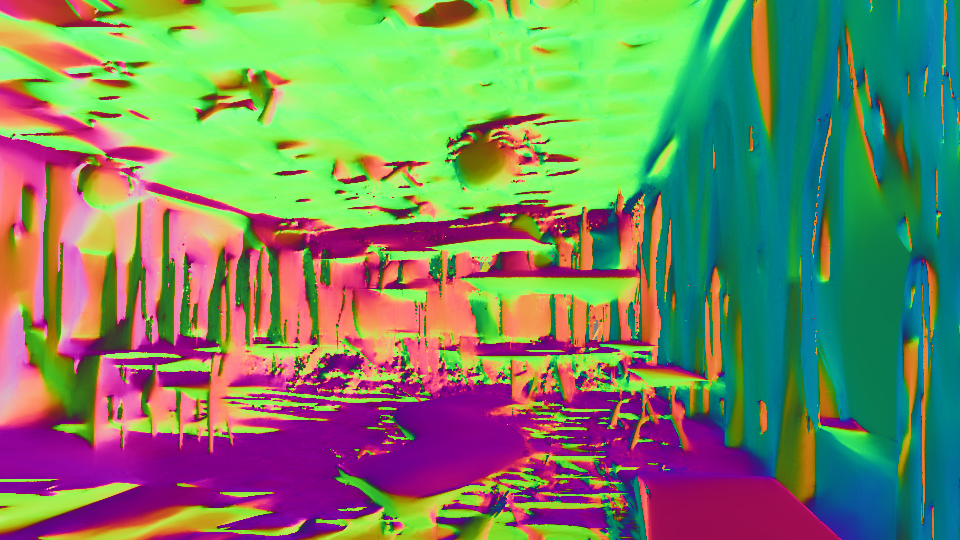

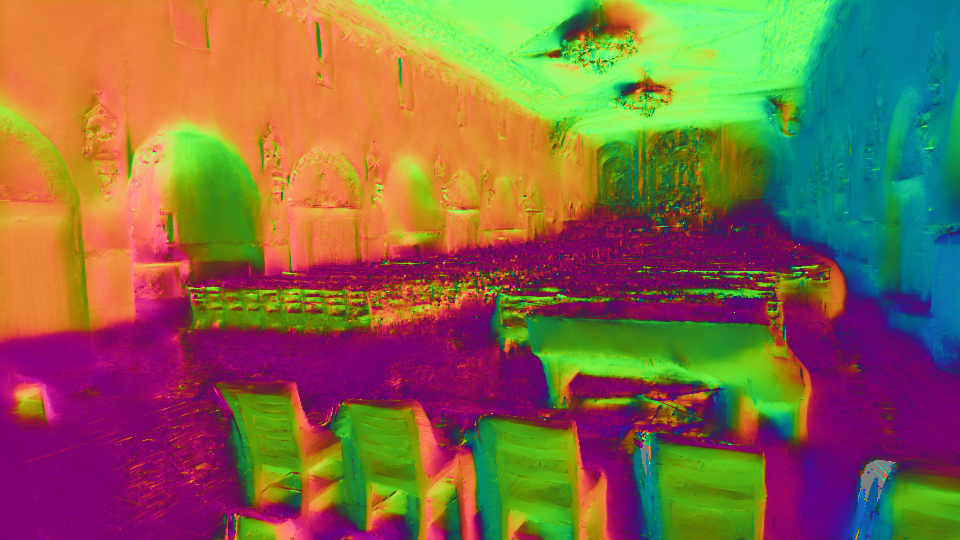

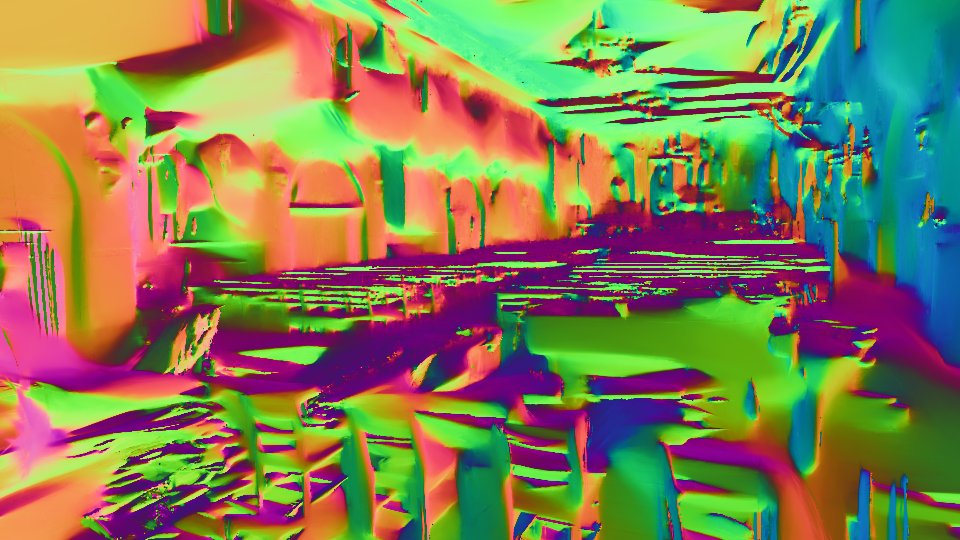

The results shown are reconstructed from only 3 training views. Our method estimates the camera poses of these views, which are then interpolated to render the video.
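The page says the estimated poses are interpolated to render the video but does not say how. A common choice, assumed here, is spherical linear interpolation (slerp) of rotation quaternions in (w, x, y, z) order combined with linear interpolation of camera centers. The following minimal sketch densifies a few key poses into a smooth path; `slerp` and `interpolate_trajectory` are hypothetical helpers, not part of InstantSplat.

```python
import numpy as np


def slerp(q0, q1, s):
    """Spherical linear interpolation between unit quaternions (w, x, y, z)."""
    q0 = q0 / np.linalg.norm(q0)
    q1 = q1 / np.linalg.norm(q1)
    dot = float(np.dot(q0, q1))
    if dot < 0.0:  # q and -q encode the same rotation; take the shorter arc
        q1, dot = -q1, -dot
    if dot > 0.9995:  # nearly identical rotations: normalized lerp is numerically safer
        q = q0 + s * (q1 - q0)
        return q / np.linalg.norm(q)
    theta = np.arccos(dot)
    return (np.sin((1.0 - s) * theta) * q0 + np.sin(s * theta) * q1) / np.sin(theta)


def interpolate_trajectory(quats, centers, frames_per_segment):
    """Hypothetical helper (assumed scheme): densify key camera poses, given as
    rotation quaternion + camera center, into a path through every key pose."""
    path = []
    for i in range(len(quats) - 1):
        for k in range(frames_per_segment):
            s = k / frames_per_segment
            q = slerp(quats[i], quats[i + 1], s)
            c = (1.0 - s) * centers[i] + s * centers[i + 1]  # linear in position
            path.append((q, c))
    # Close the path exactly on the last key pose.
    path.append((quats[-1] / np.linalg.norm(quats[-1]), centers[-1]))
    return path


# Example: three key poses, e.g. one per training view.
q_id = np.array([1.0, 0.0, 0.0, 0.0])
q_z90 = np.array([np.cos(np.pi / 4), 0.0, 0.0, np.sin(np.pi / 4)])  # 90 deg about z
keys_q = [q_id, q_z90, q_id]
keys_c = [np.zeros(3), np.array([1.0, 0.0, 0.0]), np.array([2.0, 0.0, 0.0])]
poses = interpolate_trajectory(keys_q, keys_c, frames_per_segment=30)
```

Slerp keeps the interpolated rotation at constant angular speed, which avoids the uneven motion that linearly blending rotation matrices or quaternions would produce; each interpolated pose would then be passed to the renderer to produce one video frame.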

While neural 3D reconstruction has advanced substantially, its performance degrades significantly with sparse-view data, which limits its broader applicability: Structure-from-Motion (SfM) is often unreliable in sparse-view scenarios, where feature matches are scarce. In this paper, we introduce InstantSplat, a novel approach to sparse-view 3D scene reconstruction at lightning-fast speed. InstantSplat employs a self-supervised framework that optimizes the 3D scene representation and camera poses by unprojecting 2D pixels into 3D space and aligning them through differentiable neural rendering. The optimization is initialized with a large-scale pre-trained geometric foundation model, whose inference provides dense priors in the form of initial points; all scene parameters are then further optimized using photometric errors. To mitigate the redundancy introduced by the prior model, we propose a co-visibility-based geometry initialization, and a Gaussian-based bundle adjustment rapidly adapts both the scene representation and the camera parameters without relying on a complex adaptive density control process. InstantSplat is compatible with multiple point-based representations for view synthesis and surface reconstruction. It accelerates reconstruction by more than 30 times and improves visual quality (SSIM) from 0.3755 to 0.7624 compared with traditional SfM combined with 3D-GS.
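The abstract names two steps, unprojecting 2D pixels into 3D and a co-visibility-based initialization that removes redundant points, without giving details. The following is a hypothetical sketch of the general idea under stated assumptions: every view has a dense depth map, all views share intrinsics `K`, and each view has a 4×4 camera-to-world pose (for example derived from the foundation model's predictions). A later view's point is dropped when an earlier view already observes that location at a consistent depth, using an assumed relative tolerance `rel_tol`. All function names are illustrative, not the InstantSplat code.

```python
import numpy as np


def unproject(depth, K, cam_to_world):
    """Lift every pixel of an HxW depth map to world-space points, shape (H*W, 3)."""
    h, w = depth.shape
    u, v = np.meshgrid(np.arange(w), np.arange(h))  # u = column, v = row
    pix = np.stack([u, v, np.ones_like(u)], axis=-1).reshape(-1, 3).astype(float)
    rays = pix @ np.linalg.inv(K).T        # camera-space directions with z = 1
    pts_cam = rays * depth.reshape(-1, 1)  # scale each ray by its depth
    R, t = cam_to_world[:3, :3], cam_to_world[:3, 3]
    return pts_cam @ R.T + t


def covisible_mask(points_world, depth_i, K, world_to_cam_i, rel_tol=0.05):
    """True for points that view i already sees at (approximately) the same depth.
    Assumption: a relative depth tolerance decides consistency."""
    h, w = depth_i.shape
    R, t = world_to_cam_i[:3, :3], world_to_cam_i[:3, 3]
    pc = points_world @ R.T + t
    z = pc[:, 2]
    in_front = z > 1e-6
    proj = pc @ K.T
    safe_z = np.where(in_front, z, 1.0)  # avoid dividing by non-positive depth
    uf = np.round(proj[:, 0] / safe_z)
    vf = np.round(proj[:, 1] / safe_z)
    inside = in_front & (uf >= 0) & (uf < w) & (vf >= 0) & (vf < h)
    mask = np.zeros(len(points_world), dtype=bool)
    d_ref = depth_i[vf[inside].astype(int), uf[inside].astype(int)]
    mask[inside] = np.abs(z[inside] - d_ref) <= rel_tol * d_ref
    return mask


def covisibility_init(depths, K, cam_to_worlds):
    """Hypothetical fusion: keep all points of view 0, then add only those points
    of each later view that no earlier view already covers."""
    kept = [unproject(depths[0], K, cam_to_worlds[0])]
    for j in range(1, len(depths)):
        pts = unproject(depths[j], K, cam_to_worlds[j])
        redundant = np.zeros(len(pts), dtype=bool)
        for i in range(j):
            w2c = np.linalg.inv(cam_to_worlds[i])
            redundant |= covisible_mask(pts, depths[i], K, w2c)
        kept.append(pts[~redundant])
    return np.concatenate(kept, axis=0)


# Example: identical cameras make the second view fully redundant.
K = np.array([[10.0, 0.0, 2.0], [0.0, 10.0, 2.0], [0.0, 0.0, 1.0]])
depth = np.full((4, 4), 2.0)
same = covisibility_init([depth, depth], K, [np.eye(4), np.eye(4)])
far_pose = np.eye(4)
far_pose[0, 3] = 100.0  # second camera shifted far along x: no overlap
apart = covisibility_init([depth, depth], K, [np.eye(4), far_pose])
```

With identical cameras only 16 points survive instead of 32, while non-overlapping cameras keep all 32. The point is that overlapping views would otherwise contribute duplicate points to the initial scene.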

Overall Framework. InstantSplat synergizes the geometry priors from the pre-trained MASt3R model with Gaussian Splatting to jointly optimize the camera parameters and the 3D scene.

InstantSplat rapidly reconstructs a dense 3D model from sparse-view images. Instead of relying on traditional Structure-from-Motion poses and the complex adaptive density control strategy of 3D-GS, both of which are fragile under sparse-view conditions, we use a geometric foundation model (i.e., MASt3R) to provide a dense but less accurate initialization, followed by a fast alignment step that jointly optimizes the camera poses and the 3D scene. It supports various 3D representations, including 2D-GS, 3D-GS, and Mip-Splatting.
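The fast alignment step jointly refines camera poses and scene geometry. The sketch below illustrates only the *joint* part of that idea, under heavy simplifications that are all assumptions: the differentiable Gaussian rasterizer and photometric error are replaced by a pinhole projection and a squared reprojection error against known 2D observations, there is a single camera whose rotation is fixed to identity, and gradients are derived by hand. `joint_align` is a hypothetical name, and this is a stand-in for, not an implementation of, the Gaussian-based bundle adjustment.

```python
import numpy as np


def joint_align(points, t, obs, f=1.0, lr=1.0, steps=500):
    """Gradient descent on the mean squared reprojection error over both the
    3D points (N, 3) and one camera translation t (3,). Simplification:
    rotation fixed to identity, pinhole projection instead of a rasterizer."""
    points = np.array(points, dtype=float)
    t = np.array(t, dtype=float)
    n = len(points)
    losses = []
    for _ in range(steps):
        pc = points + t                        # world -> camera with R = I
        x, y, z = pc[:, 0], pc[:, 1], pc[:, 2]
        r = f * pc[:, :2] / z[:, None] - obs   # image-space residuals, (N, 2)
        losses.append(float(np.mean(np.sum(r ** 2, axis=1))))
        g = np.empty_like(pc)                  # dLoss / d(camera-space point)
        g[:, 0] = 2 * r[:, 0] * f / z
        g[:, 1] = 2 * r[:, 1] * f / z
        g[:, 2] = -2 * f * (r[:, 0] * x + r[:, 1] * y) / z ** 2
        g /= n
        points -= lr * g                       # each point follows its own gradient
        t -= lr * g.sum(axis=0)                # the shared pose sums all of them
    return points, t, losses


# Example: noisy initial points (standing in for a less accurate prior) plus a
# slightly wrong camera translation, refined toward the 2D observations.
rng = np.random.default_rng(0)
true_pts = np.column_stack([rng.uniform(-1, 1, (20, 2)), rng.uniform(4, 6, 20)])
obs = true_pts[:, :2] / true_pts[:, 2:3]                     # f = 1, true t = 0
init_pts = true_pts + rng.normal(0.0, 0.1, true_pts.shape)
pts, t, losses = joint_align(init_pts, np.array([0.05, -0.05, 0.0]), obs)
```

Because the camera translation is shared by every point, its gradient is the sum of the per-point gradients, which is what couples pose and geometry. With a single view, moving a point along its viewing ray leaves the loss unchanged, so depth is not recovered here; multiple views are what constrain it.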

@misc{fan2024instantsplat,
  title={InstantSplat: Sparse-view Gaussian Splatting in Seconds},
  author={Zhiwen Fan and Kairun Wen and Wenyan Cong and Kevin Wang and Jian Zhang and Xinghao Ding and Danfei Xu and Boris Ivanovic and Marco Pavone and Georgios Pavlakos and Zhangyang Wang and Yue Wang},
  year={2024},
  eprint={2403.20309},
  archivePrefix={arXiv},
  primaryClass={cs.CV}
}